That sound you heard yesterday was the “splat” when the Internet ran straight into the wall of Gall’s Law.

Amazon Web Services experienced a rather large glitch on Tuesday, and seemingly half the Internet glitched with it. Amazon is, so far, being very circumspect in explaining why, but you can bet it was some simple thing that quickly got very complex.

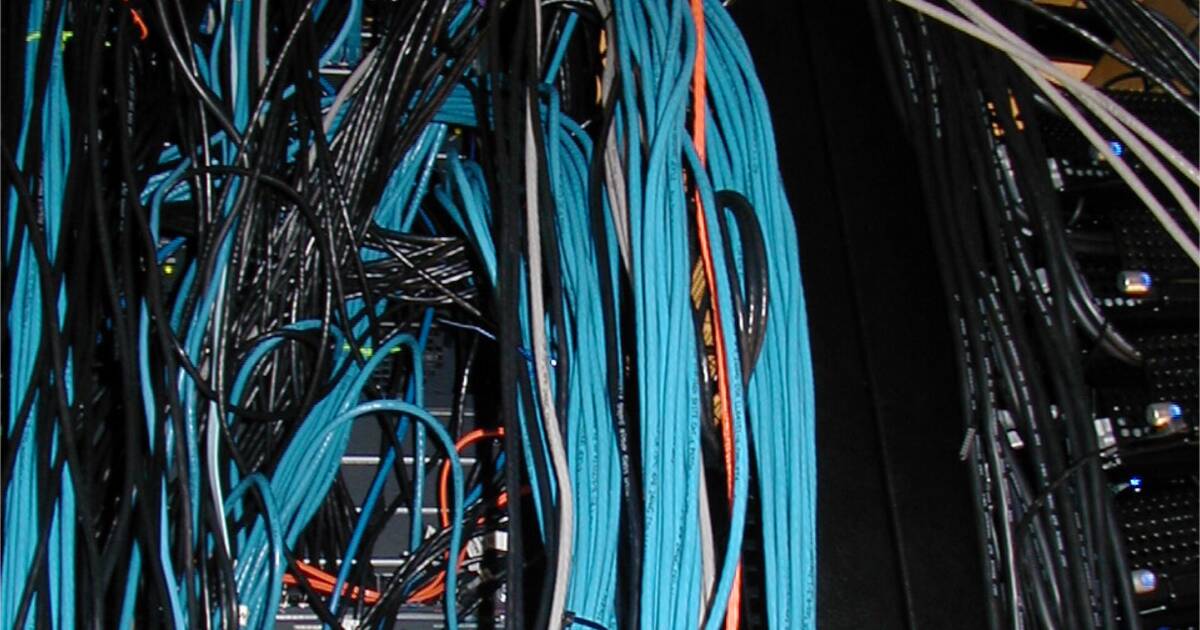

The company’s service health dashboard said only that “the root cause of this issue is an impairment of several network devices.” What network devices? How did they fail? What backups were in place to deal with this failure? Nobody’s saying much so far.

At 2:04PM PST, Amazon posted, “We have executed a mitigation which is showing significant recovery in the US-EAST-1 Region.” What mitigation? I understand that companies in the midst of a network crisis don’t want to spill all the technical details of what they’re doing, but this is really bare-bones stuff. I can also say that the worst nightmare for a network manager is not having to solve a massive failure, but dealing with the hot breath of the “suits” as management demands updates. I wish I had a dime for every time I have heard (and myself uttered) “Do you want me to solve the problem, or tell you how I’m going to solve it? I can’t do both.”

As of right now (December 8th, early morning), everything appears to be back to “normal” for AWS, according to the health dashboard. What we don’t know is how things are behind the scenes. Is everything held together with spit, glue, gum, temporary patch cables, and boxes of “hot spare” equipment that is subject to the same failure mode that took everything down yesterday? We don’t know, and Amazon isn’t saying.

If this problem only affected Amazon, I’d be like, whatever. But AWS powers a LOT of the Internet. Everything from the iRobot floor cleaner in my kitchen, to Delta Air Lines reservations, to McDonald’s, to streaming sites like Roku and Disney+, to a panoply of e-commerce sites such as Instacart and Venmo was off the air because these services rely on AWS for their core functionality or user interface.

The AP wondered out loud if the U.S. government was affected. I’d say maybe, for low priority stuff. Interestingly, Amazon, on the day of the outage, announced a new “top secret” western AWS region specifically devoted to the U.S. government. I’m not implying that the outage and the new service are in any way related, but it is a fascinating coincidence.

This isn’t the first AWS major outage. In fact, there’s a website specifically designed to track them (there’s a website for everything). The site features a timeline and post-mortem for each event. For example, the entry for a September 2 failure in the Tokyo region reads: “Real reason was hidden much deeper: engineers suspected that the failure may be related to a new protocol that was introduced to optimize the network’s reaction time to infrequent network convergence events and fiber cuts.” By trying to create more infrastructure to “self heal” physical and logical network loss, Amazon engineers outsmarted themselves and created a bigger outage.

There’s a rule of thumb that deals with this kind of large scale complexity. It’s called “Gall’s Law.”

“A complex system that works is invariably found to have evolved from a simple system that worked. A complex system designed from scratch never works and cannot be patched up to make it work. You have to start over with a working simple system.”

John Gall articulated this in his book “Systemantics: The Systems Bible,” which covers ways of communicating ideas and building complex systems. You can buy the book on Amazon (if you can get there).

The Internet, at heart, has always been a collection of rather simple systems, layered together in a way that builds complexity. I won’t explain things like the OSI model here, because that’s really in the weeds. However, the interplay between hardware, transport, and data, all the way up to your screen, mouse and keyboard (presentation to the user), at scale, creates impossibly complex systems that cannot be predicted to do what you think (or a building full of engineers in Seattle thinks) they will do under variable circumstances.

When Facebook went down on October 4, Meta engineer and VP of infrastructure Santosh Janardhan posted word salad on the company’s engineering blog:

“Our engineering teams have learned that configuration changes on the backbone routers that coordinate network traffic between our data centers caused issues that interrupted this communication. This disruption to network traffic had a cascading effect on the way our data centers communicate, bringing our services to a halt.”

Invariably, massive failures tend to start with network devices misbehaving in unanticipated ways, or with operating systems on servers propagating “cascading” events in an uncontrollable fashion. Typically, the only way to deal with cascading failures is to shut everything down piece by piece and turn it back on. This is fairly simple if you run a network shop with 10 servers and 50 workstations. Doing it on a global platform with tens of thousands of services, virtual servers, and interconnected APIs, hardware, protocols, and supervisory systems, takes hours.
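
To make the “piece by piece” idea concrete, here is a minimal Python sketch. The service names and the dependency map are made up for illustration; nothing here reflects how AWS or Meta actually wire things together. It shows two things: how one failed component drags down everything that depends on it, and how a restart has to proceed in dependency order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical dependency map: each service lists what it depends on.
DEPENDS_ON = {
    "network": [],
    "dns": ["network"],
    "storage": ["network"],
    "auth": ["dns", "storage"],
    "api": ["auth", "storage"],
    "web": ["api", "dns"],
}

def affected_by(failed, depends_on):
    """Return every service that goes down, directly or transitively,
    when the service named `failed` fails."""
    down = {failed}
    changed = True
    while changed:  # keep sweeping until no new casualties appear
        changed = False
        for service, deps in depends_on.items():
            if service not in down and any(d in down for d in deps):
                down.add(service)
                changed = True
    return down

def restart_order(down, depends_on):
    """Order restarts so each service comes back only after the
    downed services it depends on are already running."""
    sub = {s: [d for d in depends_on[s] if d in down] for s in down}
    return list(TopologicalSorter(sub).static_order())

if __name__ == "__main__":
    down = affected_by("network", DEPENDS_ON)
    print(sorted(down))
    print(restart_order(down, DEPENDS_ON))
```

With six services this is trivial. The point of the paragraph above is that the same bookkeeping across tens of thousands of interdependent services is where the hours go.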

The problem with this complexity is that AWS powers or touches nearly a third of the online services we use every day. This led Quartz to a breathless article titled “A total Amazon cloud outage would be the closest thing to the world going offline.” I suppose, if all of Amazon did go down (all regions, worldwide), for a very extended time, this could be very, well, inconvenient, and many people would just take a vacation day. Maybe the world needs a day offline?

The centralization of a majority of Internet services among Meta, Google, AWS and Microsoft is good for efficiency and cost, but really bad for complexity and cascading failure. The Internet is simply not mature enough to deal with these failures on a massive scale, and Gall’s Law demands simplicity.

Compare this with our electric grid, which has been maturing for at least 100 years. The North American Electric Reliability Corporation, known as NERC, stitches together six regional entities that govern how our electric grid is to be run in a reliable way. Their goal is to avoid cascading, massive failures that lead to widespread blackouts. NERC sets rules and guidelines for everything, from the materials used in manufacturing transformers, to the wire gauge required in a residential distribution line. It also sets standards for SCADA systems (the supervisory systems that run the grid), and the computers and devices, down to the chip level, that run everything.

NERC is much more mature, and covers a giant footprint compared to the fractured and freewheeling Internet. Sure, there are standards (in the industry, called RFCs). These are run by the Internet Engineering Task Force, aka the IETF (technically, the IETF Administration LLC). Cooperation by companies like Google or AWS with the IETF is strictly voluntary. The only means of enforcement available on Internet standards is consumer outrage at giant outages and continual problems.

On the government side, there’s the U.S. Cyber Command (military), and its civilian counterpart, the Cybersecurity & Infrastructure Security Agency, known as CISA. But these organizations generally govern security and privacy concerns. There is some attention given to reliability, but government regulation is almost certainly not the answer. Regulatory moats tend to stifle competition and innovation, and do not lend themselves to natural maturation of the industry. Rather, regulation would freeze things in place, which in a way promotes reliability: if nobody is doing anything particularly new, complex systems tend to keep running.

What the Internet needs is more maturity, and perhaps a little more wisdom from companies rushing to host their services with one of the big tech leviathans. Anyone can buy a multi-gigabit fiber connection these days, and setting up a few servers in different parts of the country without relying on AWS or Azure isn’t necessarily a bad thing. That way, when the cascade ripples through, customers are barely affected.
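
For the curious, the “few servers in different parts of the country” approach can be as simple as having clients try each location in turn. The Python sketch below is only an illustration under stated assumptions: the hostnames are placeholders, and the `/health` path is a hypothetical convention, not something any particular provider defines.

```python
import urllib.error
import urllib.request

# Hypothetical endpoints in independent locations; hostnames are placeholders.
ENDPOINTS = [
    "https://east.example.com/health",
    "https://central.example.com/health",
    "https://west.example.com/health",
]

def http_probe(url, timeout=2.0):
    """Return True if the URL answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, or an HTTP error status.
        return False

def first_healthy(endpoints, probe=http_probe):
    """Return the first endpoint whose probe succeeds, or None if all fail."""
    for url in endpoints:
        if probe(url):
            return url
    return None

if __name__ == "__main__":
    print(first_healthy(ENDPOINTS))
```

The `probe` parameter exists so the selection logic can be exercised without touching the network. A real deployment would more likely lean on DNS or a load balancer than on client-side loops, but the principle is the same: no single location has to be up.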

For now and the foreseeable future though, I think we’re going to hear that “splat” sound pretty regularly. Complex systems break, and when they reach the level of complexity of AWS, Azure, Google, or Meta, Gall’s Law becomes a very tall wall to climb, and a hard wall when systems fly at it and go splat.

So when you hear it, enjoy your day offline and go get a latte.

Follow Steve on Twitter @stevengberman.

The First TV contributor network is a place for vibrant thought and ideas. Opinions expressed here do not necessarily reflect those of The First or The First TV. We want to foster dialogue, create conversation, and debate ideas. See something you like or don’t like? Reach out to the author or to us at ideas@thefirsttv.com.